Here you will find all the key information about our company and our digital services. Our fact sheet gives you a quick overview of our expertise, experience, and services.

Download fact sheet (PDF)

Welcome to Macaw

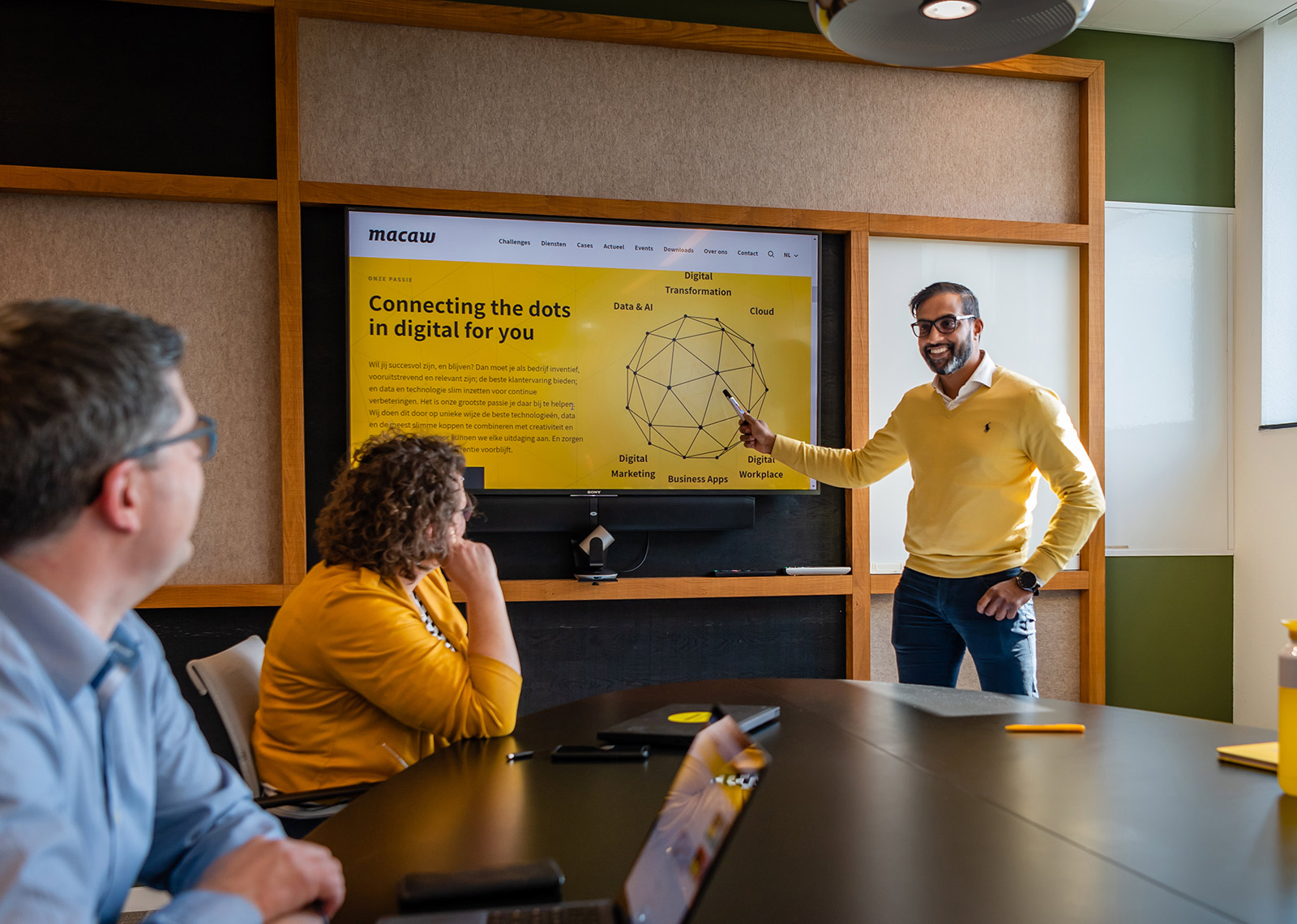

Connecting the dots in digital for you

Our Services

A unique combination of a systems integrator and a digital agency. Thanks to our broad range of services, we can take on any challenge and contribute to the success of your organization.

Whatever your challenge, we offer the best solution.

Digital Marketing & Sales

Blogs & News

Working at Macaw

Microsoft & Macaw: Strategic Partners for Digital Transformation

Are you looking for a strategic partner who is innovative and ready to think in new directions? Then Macaw is the right place for you. For years, our certified employees have been helping companies in the financial and business services sector and in manufacturing become even more successful. We are the link between Microsoft and your organization, and we turn the solution into business value.

Sitecore & Macaw: Platinum Implementation Partner

Are you looking for an experienced, committed, and successful Sitecore partner? Macaw is a Sitecore Platinum Implementation Partner with a strong international track record for clients such as Heineken, Schmitz Cargobull, Stadtwerke Krefeld, and many more. Sitecore has named Macaw a Sitecore Platinum Implementation Partner, the highest possible partner status. With this, Sitecore confirms Macaw's expertise in digital marketing, e-commerce, marketing automation, and marketing intelligence.